Prompting for Dummies (with Law Degrees)

Another episode in my ongoing series "Arguments with Algorithms"

My Latest Obsession

So I’m staring at a ChatGPT conversation that's useless AF. I'd asked it to help me understand the implications of a new data privacy regulation, and it gave me the most generic response imaginable – basically a Wikipedia summary that I could have found in 30 seconds. Meanwhile, I'm thinking: "I need you to break down how THIS specific law affects my clients' business operations, not give me a textbook definition."

Sound familiar? If you're a lawyer who's tried to wrestle with AI tools, you know the pain. These things are incredibly powerful, but they're also like brilliant interns who've never set foot in a law school. They know everything and nothing at the same time.

But as we all know by now, the problem isn't the AI. The problem is that lawyers don't know how to talk to it.

We're trained to be precise, to anticipate counterarguments, to provide context and authority. But when it comes to prompting AI, most of us are throwing legal spaghetti at the wall and hoping something sticks.

So I did what any reasonable person would do: I decided to build a better way to prompt AI for legal work. Because apparently, having one side project wasn't enough chaos in my life.

Stuff I Learnt

My old approach (basically just asking a general-purpose LLM to "highlight the legal issues") got me a generic response that mentioned some vague privacy concerns and called it a day.

If you don't want to shell out extra cash for a purpose-built legal AI product (and there are many, many out there), read on. Here's what I've discovered about prompt-engineering a general AI tool after countless late-night AI wrestling matches.

The Hypothetical Scenario Approach: There's a specific framing technique that transforms how AI responds to legal questions. Instead of asking direct questions like "What are the privacy law issues with employee monitoring?", I use hypothetical scenario prompting: "If we were to implement keystroke monitoring software for remote sales employees in California, what would be the compliance advice we'd need in order to avoid CCPA violations and achieve our goal of monitoring productivity without legal exposure?" This framing pushes the AI to adopt the right professional persona on its own and to think through practical steps rather than giving textbook explanations.
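
If you find yourself reusing this framing, it's basically a fill-in-the-blanks template. Here's a minimal Python sketch that rebuilds the example prompt above from its parts. The function name `hypothetical_prompt` and its parameters are my own illustrative assumptions, not part of any real tool or library.

```python
def hypothetical_prompt(action: str, who: str, jurisdiction: str,
                        law: str, goal: str) -> str:
    """Recast a direct legal question as an 'If we were to...' scenario.

    Hypothetical helper: the parameters just name the pieces of the
    example prompt (the action, who it affects, where, which law, and the goal).
    """
    return (
        f"If we were to {action} for {who} in {jurisdiction}, "
        f"what would be the compliance advice we'd need in order to avoid "
        f"{law} violations and achieve our goal of {goal}?"
    )


# Rebuild the keystroke-monitoring example from its parts.
prompt = hypothetical_prompt(
    action="implement keystroke monitoring software",
    who="remote sales employees",
    jurisdiction="California",
    law="CCPA",
    goal="monitoring productivity without legal exposure",
)
print(prompt)
```

Swap in a different action, jurisdiction, or statute and the "we're actually doing this" framing stays intact.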

The Precision Context Method: The second technique involves what I call surgical specificity in your prompts. Rather than broad questions, you structure your request like you're briefing a junior associate: set the precise legal context upfront, ask layered questions that build on each other, and specify exactly what kind of analysis you need. For example: "Assume I'm a California employer implementing keystroke monitoring software for remote workers in sales roles. Walk me through the specific CCPA notice requirements, then identify the three biggest litigation risks, and finally give me a compliance checklist prioritized by legal exposure." This systematic approach treats AI like a skilled research assistant rather than a Magic 8 Ball, and the difference in response quality is remarkable.
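
The three parts of that briefing (context first, then layered questions, then the output you want) map neatly onto a little data structure. Below is a rough Python sketch under my own assumptions: the `PrecisionPrompt` class, its field names, and the "Format:" line are hypothetical illustrations, not how any particular product does it.

```python
from dataclasses import dataclass, field


@dataclass
class PrecisionPrompt:
    """Hypothetical container for a 'Precision Context Method' prompt."""
    context: str                                         # who you are and the key facts, stated up front
    questions: list[str] = field(default_factory=list)   # layered questions, in the order they build
    output_format: str = ""                              # assumed extra: the shape of answer you want back

    def render(self) -> str:
        lines = [self.context, ""]
        # Number the questions so the model tackles them in sequence.
        for i, question in enumerate(self.questions, start=1):
            lines.append(f"{i}. {question}")
        if self.output_format:
            lines += ["", f"Format: {self.output_format}"]
        return "\n".join(lines)


brief = PrecisionPrompt(
    context=("Assume I'm a California employer implementing keystroke monitoring "
             "software for remote workers in sales roles."),
    questions=[
        "Walk me through the specific CCPA notice requirements.",
        "Then identify the three biggest litigation risks.",
        "Finally, give me a compliance checklist prioritized by legal exposure.",
    ],
    output_format="Numbered sections matching the questions above.",
)
print(brief.render())
```

Numbering the questions is just one simple way to keep the layering explicit; plain prose with "first, then, finally" can work too.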

What I Built

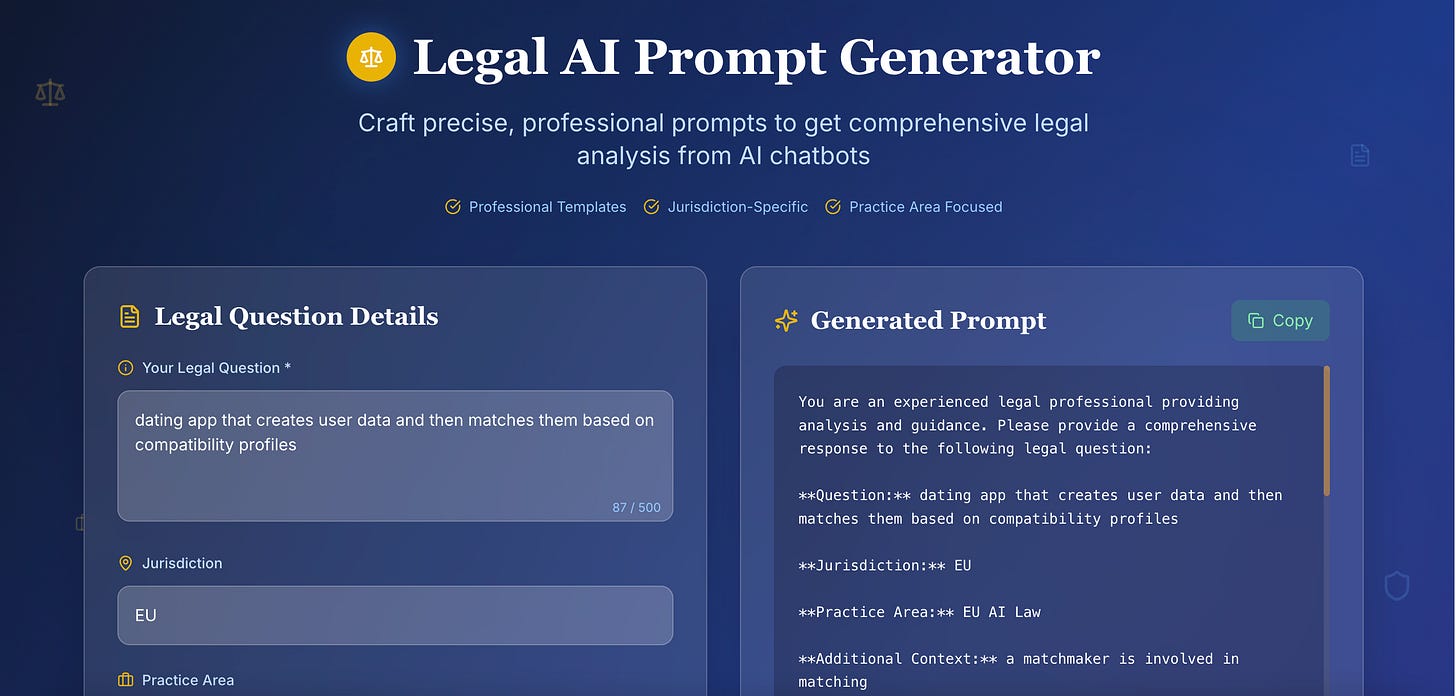

Meet my latest creation: a Legal AI Prompt Generator that lives at legalprompt.replit.app. It's not just another "here's some prompts" website – it's a systematic approach to getting AI to think like a lawyer.

Instead of starting with a blank prompt box and hoping for the best, you select your practice area, define your specific need, and the system generates a prompt that will actually get you something useful.

My prompt app essentially takes the principles of the Precision Context Method (surgical specificity, layered questioning, precise context-setting) and bakes them into an automated template. So users get the benefits of expert-level prompt engineering without needing to master the craft themselves.

It's like having a prompt engineer who went to law school sitting next to you, translating your legal needs into language that AI can actually work with.

The Complexity Control Feature: One of the most important features I built into the prompt generator is what I call the "complexity dial", a slider that ranges from 1 to 10, with "Explain Like I'm 5" at one end and "Legal Professional" at the other. This isn't just about dumbing down or complicating the language; it's about matching the AI's response to the user's actual needs and expertise level.

At levels 1-3, the AI explains legal concepts in plain English, avoids jargon, and focuses on practical implications that anyone can understand. At levels 7-10, it uses proper legal terminology, cites relevant statutes and case law, and assumes familiarity with legal procedures. The middle range (4-6) strikes a balance: it includes some legal terms but explains them, making it perfect for business professionals who need to understand the legal implications without becoming lawyers themselves.
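
For the technically curious, here's roughly how a dial like this could be wired into a prompt template. This is a minimal Python sketch, not the app's actual code: the band boundaries (1-3, 4-6, 7-10) come from the description above, but the function names `complexity_instruction` and `build_prompt` and the exact instruction wording are assumptions for illustration.

```python
def complexity_instruction(level: int) -> str:
    """Map a 1-10 'complexity dial' value to a style instruction (hypothetical).

    Bands follow the post: 1-3 plain English, 4-6 balanced, 7-10 professional.
    The wording of each instruction is an illustrative assumption.
    """
    if not 1 <= level <= 10:
        raise ValueError("level must be between 1 and 10")
    if level <= 3:
        return ("Explain in plain English, avoid legal jargon, and focus on "
                "practical implications anyone can understand.")
    if level <= 6:
        return ("Use some legal terms but define each one, for a business "
                "professional who needs to understand the legal implications.")
    return ("Use proper legal terminology, cite relevant statutes and case law, "
            "and assume familiarity with legal procedures.")


def build_prompt(practice_area: str, need: str, level: int) -> str:
    """Combine practice area, specific need, and complexity into one prompt (hypothetical)."""
    return (f"Practice area: {practice_area}\n"
            f"Task: {need}\n"
            f"Style: {complexity_instruction(level)}")


print(build_prompt("Privacy", "Explain CCPA notice duties for employee monitoring", 2))
```

The point of the design is that the legal substance (practice area and need) stays fixed while only the style instruction changes as you move the slider.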

I tried the prompts the generator produced, and compared with my old "highlight the legal issues" approach, the difference was night and day. The output wasn't just better; it was actually usable.

Takeaways

Building this app taught me that the future of legal AI isn't about replacing lawyers but about making lawyers better at using AI. The technology is already here; we just need to learn how to speak its language.

The legal profession is notoriously slow to adopt new technology, but AI is different. It can be a valuable thought partner, though only if we know how to prompt it correctly. And obviously: check your goddamn work!

This brings up a whole different conversation about how the next generation of lawyers will get trained if they rely heavily on AI. Maybe another post?

But the biggest lesson is pretty simple: the best legal prompts are boring. They're methodical, systematic, and follow the same analytical frameworks we use in traditional legal analysis. The magic isn't in being creative but in being thorough and precise.

Why This Matters (Beyond My Personal Obsession)

We're at an inflection point in the legal profession. AI tools are becoming more sophisticated, clients are demanding more efficiency, and the lawyers who figure out how to work effectively with AI are going to dominate their practices.

I predict that lawyers who master AI prompting are going to have a massive advantage over those who don't. Might feel obvious to some, but thought I’d say it out loud.

So if you take away anything at all from this, it's this: most people are still treating AI like a slightly smarter Google search. Don't be that guy. If you do, you're not getting the full value of this technology; you're just not prompting correctly.

Try It Out!

The app is live at legalprompt.replit.app. It's free, it's fast, and it might just change how you think about using AI in your practice. BTW, even if you're NOT a lawyer, you can totally use this: pick the “Explain Like I’m 5” option and see what comes up. If you're facing a murder or other felony charge, though, you may want to actually get a real lawyer.

For real though - this is not legal advice.

But if you ARE a lawyer - give it a try the next time you're staring at a blank ChatGPT box, wondering how to get the analysis you actually need. Because life's too short for mediocre AI responses, and your clients deserve better than legal spaghetti.

If you enjoyed this post, subscribe to Arguments with Algorithms for more stories about what happens when lawyers learn to code.